Before diving into CUDA kernel design, understanding your GPU’s device specifications is an essential prerequisite.

This post documents how to retrieve GPU device information using the CUDA Driver API, and highlights which data points are worth checking.

Github:

CUDA provides two main APIs:

1. CUDA Runtime API

2. CUDA Driver API

If your goal is to query low-level, hardware-specific details, you’ll want to become comfortable with the CUDA Driver API.

Note: If you’re using Visual Studio, you need to do two things before calling any CUDA Driver API functions:

1. Include the CUDA headers (cuda.h) in your project.

2. Link against the CUDA Driver library, cuda.lib. The CUDA Visual Studio integration typically adds the Runtime library for you, but cuda.lib usually has to be added manually:

- Topbar → Project → Properties → Linker → Input → Additional Dependencies → add cuda.lib

In this example, we’ll query the following device attributes:

1. Driver API version

2. Maximum shared memory per block

3. Maximum registers per block

4. Maximum threads per block

5. Number of multiprocessors

6. GPU compute capability version

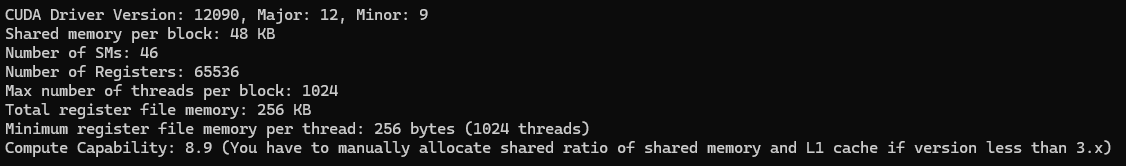

Using an NVIDIA GeForce RTX 4070 as an example, here’s the type of output you can expect:

For most device-related information, the go-to function is:

```cpp
CUresult cuDeviceGetAttribute(int *pi, CUdevice_attribute attrib, CUdevice dev);
```

This single function can fetch nearly all the data we're interested in. The one exception is the driver version, which comes from cuDriverGetVersion. Below is a breakdown of each attribute.

1. Driver API Version

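When setting up a GPU server or developing GPU applications, checking the driver version helps confirm that the installed driver supports the CUDA version your program was built with, so it will run as expected on the target machine. Note that the Driver API reports the CUDA version the driver supports, not the driver's own release number.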
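The integer from `cuDriverGetVersion` is encoded as 1000 × major + 10 × minor, so CUDA 12.4 is reported as 12040. Below is a small decoding sketch. `decodeDriverVersion` and `driverSupportsToolkit` are hypothetical helpers. The comparison is deliberately conservative: it ignores CUDA's minor-version compatibility rules.

```cpp
// Hypothetical result type for a decoded CUDA version.
struct CudaVersion {
    int major;
    int minor;
};

// Splits the value from cuDriverGetVersion (1000 * major + 10 * minor).
CudaVersion decodeDriverVersion(int encoded) {
    return CudaVersion{encoded / 1000, (encoded % 1000) / 10};
}

// Conservative check: is the driver at least as new as the toolkit the program
// was built with (e.g. CUDA_VERSION from cuda.h, which uses the same encoding)?
bool driverSupportsToolkit(int driverEncoded, int toolkitEncoded) {
    return driverEncoded >= toolkitEncoded;
}
```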

2. Shared Memory

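Shared memory is a critical resource in CUDA kernel design and plays a key role in performance optimization. On many GPUs, shared memory and the L1 cache are carved out of the same on-chip storage. On Fermi and Kepler GPUs, for example, each SM had 64 KB of this storage, so a configuration with 48 KB of shared memory left 16 KB for L1. Newer architectures split the storage differently, so the reported shared memory size is not a reliable guide to L1 size. Note also that CU_DEVICE_ATTRIBUTE_MAX_SHARED_MEMORY_PER_BLOCK usually reports the default 48 KB per-block limit. On recent GPUs, kernels can opt in to more dynamic shared memory, up to CU_DEVICE_ATTRIBUTE_MAX_SHARED_MEMORY_PER_BLOCK_OPTIN.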

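```cuda
// Static shared memory: the size is fixed at compile time.
__global__ void staticKernel(float *out) {
    __shared__ float buffer[256];  // 256 floats = 1 KB per block
    // Use buffer here
}

// Dynamic shared memory: the size is supplied at launch time.
__global__ void myKernel(float *out) {
    extern __shared__ float dynamicBuffer[];
    // Use dynamicBuffer here
}

size_t sharedMemBytes = 1024;  // 1 KB = 256 floats
// Third launch parameter: dynamic shared memory per block, in bytes
// (numBlocks, threadsPerBlock and d_out are assumed to be defined elsewhere).
myKernel<<<numBlocks, threadsPerBlock, sharedMemBytes>>>(d_out);
```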
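Comparing a block's shared memory usage with CU_DEVICE_ATTRIBUTE_MAX_SHARED_MEMORY_PER_MULTIPROCESSOR gives a rough upper bound on how many blocks shared memory alone lets one SM hold. This is a simplified sketch, and `blocksPerSmBySharedMem` is a hypothetical helper. It ignores the small per-block shared memory reservation on newer architectures and the L1/shared carveout setting.

```cpp
#include <cstddef>
#include <limits>

// Hypothetical helper: upper bound on resident blocks per SM imposed by shared
// memory alone. Returns -1 if a block requests more than the per-block limit,
// since such a launch would fail.
int blocksPerSmBySharedMem(std::size_t smemPerSm,
                           std::size_t smemPerBlockLimit,
                           std::size_t smemPerBlockUsed) {
    if (smemPerBlockUsed > smemPerBlockLimit) return -1;
    if (smemPerBlockUsed == 0) {
        // No shared memory used: this resource imposes no limit.
        return std::numeric_limits<int>::max();
    }
    return static_cast<int>(smemPerSm / smemPerBlockUsed);
}
```

For example, with 102400 bytes per SM and 16384 bytes per block, at most 6 blocks fit, even if other resources would allow more.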

3. Register Count

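Registers hold per-thread variables, and each register is 32 bits wide. CU_DEVICE_ATTRIBUTE_MAX_REGISTERS_PER_BLOCK reports how many registers are available to a single thread block. All threads in the block share that budget, so registers per thread multiplied by threads per block cannot exceed it. Knowing this number helps you reason about kernel resource allocation and occupancy.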
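Because every thread in a block draws from the same pool, dividing the per-block register count by the block size gives the most registers each thread can use. This is a simplified sketch. `maxRegistersPerThread` is a hypothetical helper, and real allocation happens in chunks, so the true limit can be slightly lower. The 255 cap reflects the per-thread maximum on recent architectures.

```cpp
#include <algorithm>

// Hypothetical helper: registers available per thread if a block of
// `threadsPerBlock` threads must fit in `regsPerBlock` 32-bit registers.
// Ignores allocation granularity, so treat the result as an upper bound.
int maxRegistersPerThread(int regsPerBlock, int threadsPerBlock) {
    const int kHardwareCap = 255;  // per-thread limit on recent architectures
    if (threadsPerBlock <= 0) return 0;
    return std::min(regsPerBlock / threadsPerBlock, kHardwareCap);
}
```

On a device reporting 65536 registers per block, a kernel compiled to use 80 registers per thread cannot launch with 1024 threads per block. nvcc's `--maxrregcount` option or the `__launch_bounds__` qualifier can constrain register use in that case.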

4. Maximum Threads per Block

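While the maximum number of threads per block is often 1024, the block size you actually choose still matters for performance. Block sizes that are not a multiple of the warp size (32) leave partially filled warps. Very large blocks can also reduce occupancy when each thread uses many registers or a lot of shared memory, because fewer blocks then fit on each multiprocessor.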
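One common way to pick a launch configuration is to:

- round the requested block size up to a multiple of the warp size,
- clamp it to CU_DEVICE_ATTRIBUTE_MAX_THREADS_PER_BLOCK,
- derive the grid size with a ceiling division.

Below is a sketch with hypothetical names. It hard-codes a warp size of 32, which CU_DEVICE_ATTRIBUTE_WARP_SIZE can confirm.

```cpp
#include <algorithm>

// Hypothetical launch configuration type.
struct LaunchConfig {
    int threadsPerBlock;
    int numBlocks;
};

// Chooses a warp-aligned block size no larger than the device limit,
// then enough blocks to cover `n` elements.
LaunchConfig makeLaunchConfig(int n, int requestedThreads, int maxThreadsPerBlock) {
    const int kWarpSize = 32;  // assumed; query CU_DEVICE_ATTRIBUTE_WARP_SIZE to confirm
    // Round up so every warp in the block is fully populated.
    int threads = ((requestedThreads + kWarpSize - 1) / kWarpSize) * kWarpSize;
    threads = std::max(kWarpSize, std::min(threads, maxThreadsPerBlock));
    int blocks = (n + threads - 1) / threads;  // ceiling division
    return LaunchConfig{threads, blocks};
}
```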

5. Number of Multiprocessors

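More multiprocessors (SMs) generally mean higher potential throughput, since each SM executes blocks independently. A grid with fewer blocks than the device has SMs leaves some of them idle. Actual performance still depends on kernel design and memory access patterns.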
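One way to see why the SM count matters is to count "waves". Suppose each SM can hold a fixed number of resident blocks. Then a grid runs in ceil(numBlocks / (SMs × blocksPerSM)) rounds, and a partly filled final wave leaves SMs idle. This is a simplified model with hypothetical names: it assumes every block takes the same time, while real scheduling is more dynamic.

```cpp
// Hypothetical helper: number of scheduling waves for a grid, assuming each SM
// holds `blocksPerSm` blocks at once and all blocks take equal time.
int waveCount(int numBlocks, int smCount, int blocksPerSm) {
    int slots = smCount * blocksPerSm;  // blocks resident at the same time
    if (slots <= 0) return 0;
    return (numBlocks + slots - 1) / slots;
}

// Fraction of block slots busy during the last wave (1.0 means no tail effect).
double lastWaveUtilization(int numBlocks, int smCount, int blocksPerSm) {
    int slots = smCount * blocksPerSm;
    if (slots <= 0 || numBlocks <= 0) return 0.0;
    int remainder = numBlocks % slots;
    return remainder == 0 ? 1.0 : static_cast<double>(remainder) / slots;
}
```

With 46 SMs and 4 blocks per SM, a 1000-block grid needs 6 waves. The last wave keeps only about 43% of the slots busy, while a 920-block grid fills exactly 5 waves.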

6. Compute Capability Version

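The compute capability identifies the GPU architecture and determines its hardware features. For example, on compute capability 2.x and 3.x devices (Fermi and Kepler), the split between shared memory and L1 cache could be tuned manually, for instance with cuCtxSetCacheConfig. On devices of compute capability 7.0 and later, a kernel can instead hint its preferred shared memory carveout through CU_FUNC_ATTRIBUTE_PREFERRED_SHARED_MEMORY_CARVEOUT.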
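Feature support is tied to compute capability, so a common pattern is to combine the values of CU_DEVICE_ATTRIBUTE_COMPUTE_CAPABILITY_MAJOR and CU_DEVICE_ATTRIBUTE_COMPUTE_CAPABILITY_MINOR into one number and compare it with a threshold. Below is a sketch with hypothetical helper names. The encoding mirrors nvcc's `sm_XY` naming, so an RTX 4070 (compute capability 8.9) becomes 89.

```cpp
// Hypothetical helper: encode compute capability as major * 10 + minor.
// Minor versions are single digits, so the encoding preserves ordering.
int encodeComputeCapability(int major, int minor) {
    return major * 10 + minor;
}

// True if the device's capability is at least (reqMajor, reqMinor).
bool hasComputeCapability(int major, int minor, int reqMajor, int reqMinor) {
    return encodeComputeCapability(major, minor) >=
           encodeComputeCapability(reqMajor, reqMinor);
}
```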

By understanding these device-level parameters, you can make more informed decisions during CUDA kernel design, ensuring better performance and resource utilization.
